Creating a PostgreSQL Cluster with Kubernetes CRDs

Jonathan S. Katz

8 min readMore by this author

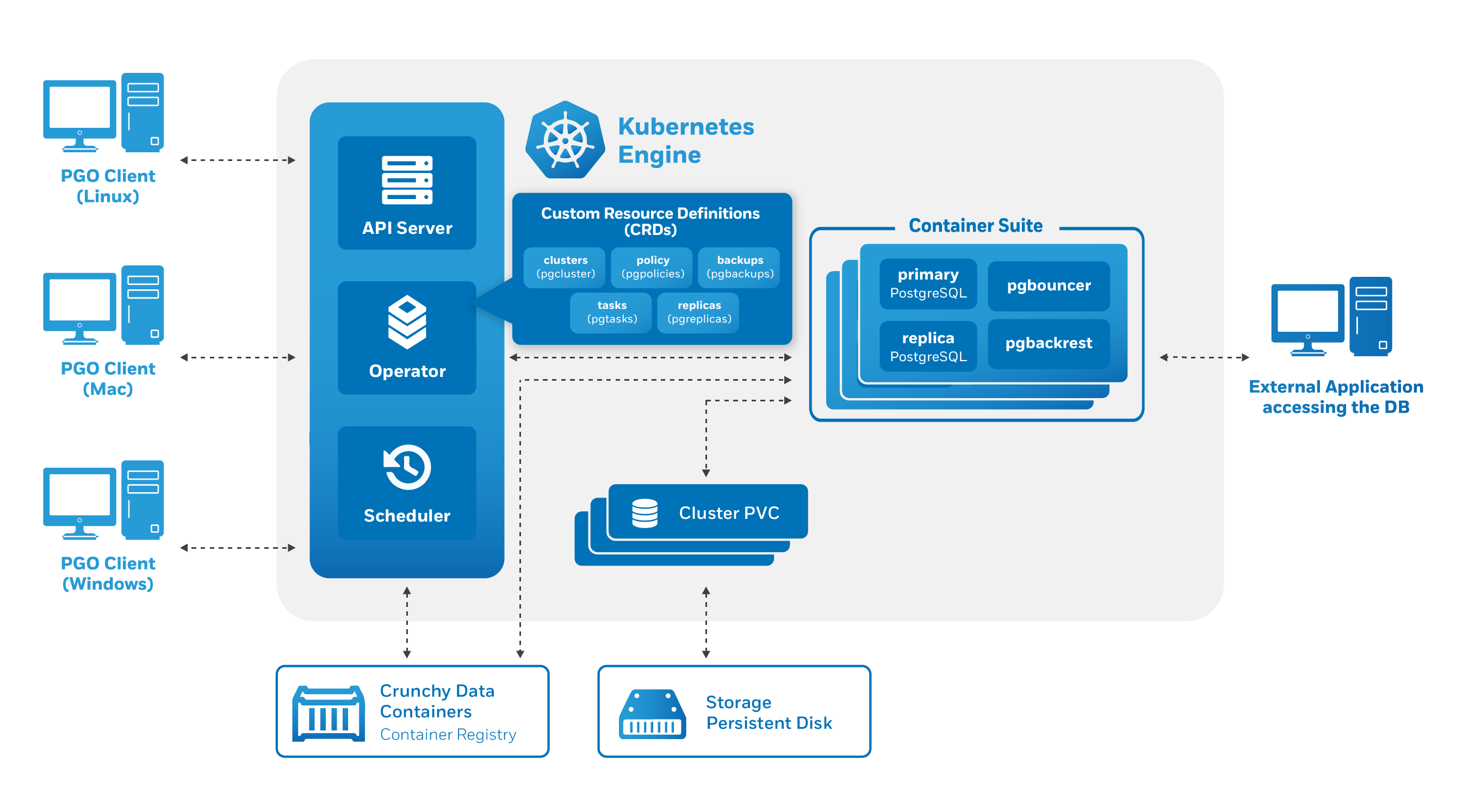

The PostgreSQL Operator provides users with a few different methods to perform PostgreSQL cluster operations, via:

- a REST API

pgo, PostgreSQL Operator Command Line Interface (CLI)- Directly interfacing with Kubernetes, including various APIs and custom resource definitions (CRDs).

While the REST API and pgo provide many conveniences, there are use cases that require the third method, i.e. interacting directly with Kubernetes to create the desired PostgreSQL cluster setup.

To demonstrate this, let's look at how we can manipulate the CRD responsible for managing PostgreSQL clusters to create a new cluster managed by the PostgreSQL Operator.

Background

The PostgreSQL Operator defines the following series of Kubernetes Custom Resource Definitions (CRDs). Each of these CRDs perform specific PostgreSQL management operations that are common for managing the full lifecycle of a database cluster, such as provisioning, scaling, taking backups, etc.

The CRDs contain metadata about each PostgreSQL cluster that is managed by the Operator and act as the "source of truth" for Kubernetes in terms of the overall state of the components that make up the clusters.

The PostgreSQL Operator defines the following CRDs:

- Cluster (pgclusters) defines the key attributes need to run PostgreSQL cluster, such as CPU/RAM/disk size, and lets Kubernetes know which clusters should exist.

- Tasks (pgtask) tracks administrative tasks and allows a user of the Operator to track the state of certain operations.

- Replica (pgreplica) stores the definition for replicas of a particular PostgreSQL cluster.

- Policies (pgpolicies) is used for custom policy files (e.g. for RLS, or any type of custom SQL file).

- Backup (pgbackups) tracks the status of a backup as well as its progress.

Creating a Cluster Directly with a CRD

To create a cluster directly with the pgcluster CRD, you first need to install the PostgreSQL Operator in your Kubernetes environment. There are several ways to install the PostgreSQL Operator, including by following our HOWTO on installing the PostgreSQL Operator in GKE. This article is using version 4.0.1.

The PostgreSQL Operator repository provides an example for how to create a cluster by directly interfacing with a CRD. We will be mostly following this example, but detail what each step entails.

In order to successfully create a PostgreSQL cluster using the Kubernetes CRD, we will need to do the following:

- Define several Kubernetes secrets that will store passwords needed for PostgreSQL

- Generate SSH keys that will be used by the backup management tool pgBackRest, which is used by the cluster

- Edit the configuration file for the new PostgreSQL cluster that will be applied to the pgcluster CRD

- Add all this information to Kubernetes!

Without further ado, let's begin!

Step 0: The Operator Environment

There are a few assumptions made about the PostgreSQL Operator operating environment (no pun intended) when following this example:

- We are using version 4.0.1 of the PostgreSQL Operator

- We are using a single namespace called pgo; this is great for a development environment as you only need a single namespace for everything

- We will call our cluster crdcluster

Step 1: Create PostgreSQL Passwords

PostgreSQL passwords are not stored in the pgcluster CRD, but rather in Kubernetes secrets and are subsequently loaded into the deployed pods. Each secret is base64 encoded. For example, if you want to base64 encode the string password, you can do the following from the command line:

echo -n "password" | base64

which will output the value cGFzc3dvcmQ=.

The PostgreSQL Operator requires three user account & password combinations to be made for a cluster:

- postgres - this is the PostgreSQL superuser for the cluster.

- primaryuser - this is meant to be for the primary user for the cluster

- user - a third user account that can connect to the cluster. In this example, we will call this user myuser.

On the machine you are running kubectl from, create three files called postgres-secret.yml, primaryuser-secret.yml, and myuser-secret.yml. Set each user to have the password password (in a production environment, you would set up different passwords for each).

For this example, the postgres-secret.yml file should look like this:

apiVersion: v1

data:

password: cGFzc3dvcmQ=

username: cG9zdGdyZXM=

kind: Secret

metadata:

labels:

pg-cluster: crdcluster

name: crdcluster-postgres-secret

namespace: pgo

type: Opaque

The primaryuser-secret.yml file should look like this:

apiVersion: v1

data:

password: cGFzc3dvcmQ=

username: cHJpbWFyeXVzZXI=

kind: Secret

metadata:

labels:

pg-cluster: crdcluster

name: crdcluster-primaryuser-secret

namespace: pgo

type: Opaque

and for myuser-secret.yml create a file that looks like this:

apiVersion: v1

data:

password: cGFzc3dvcmQ=

username: bXl1c2Vy

kind: Secret

metadata:

labels:

pg-cluster: crdcluster

name: crdcluster-myuser-secret

namespace: pgo

type: Opaque

Step 2: Generate SSH Keys for pgBackRest

The integration with pgBackRest relies upon having the appropriate sshd configuration as well as a public/private SSH keypair stored in a Kubernetes secret. We can use the default configuration files that come with the PostgreSQL Operator, but we will need to generate a unique SSH keypair and encode it in base64.

Generate a SSH keypair with the following command:

ssh-keygen -f crdcluster-key - N ''

Get the base64 encoded values for each key, which you can do with the following commands:

base64 -i crdcluster-key

base64 -i crdcluster-key.pub

Create a secret file called backrest-repo-config.yml using the example below. Copy and paste the base64 encoded values in crdcluster-key and crdcluster-key.pub in their respective places (the crdcluster-key base64 value goes in the id_rsa and ssh_host_rsa_key attributes, and the crdcluster-key.pub value goes in the authorized_keys attribute).

apiVersion: v1

data:

authorized_keys: <crdcluster-key.pub>

id_rsa: <crdcluster-key>

ssh_host_rsa_key: <crdcluster-key>

config: SG9zdCAqCglTdHJpY3RIb3N0S2V5Q2hlY2tpbmcgbm8KCUlkZW50aXR5RmlsZSAvdG1wL2lkX3JzYQoJUG9ydCAyMDIyCglVc2VyIHBnYmFja3Jlc3QK

sshd_config: IwkkT3BlbkJTRDogc3NoZF9jb25maWcsdiAxLjEwMCAyMDE2LzA4LzE1IDEyOjMyOjA0IG5hZGR5IEV4cCAkCgojIFRoaXMgaXMgdGhlIHNzaGQgc2VydmVyIHN5c3RlbS13aWRlIGNvbmZpZ3VyYXRpb24gZmlsZS4gIFNlZQojIHNzaGRfY29uZmlnKDUpIGZvciBtb3JlIGluZm9ybWF0aW9uLgoKIyBUaGlzIHNzaGQgd2FzIGNvbXBpbGVkIHdpdGggUEFUSD0vdXNyL2xvY2FsL2JpbjovdXNyL2JpbgoKIyBUaGUgc3RyYXRlZ3kgdXNlZCBmb3Igb3B0aW9ucyBpbiB0aGUgZGVmYXVsdCBzc2hkX2NvbmZpZyBzaGlwcGVkIHdpdGgKIyBPcGVuU1NIIGlzIHRvIHNwZWNpZnkgb3B0aW9ucyB3aXRoIHRoZWlyIGRlZmF1bHQgdmFsdWUgd2hlcmUKIyBwb3NzaWJsZSwgYnV0IGxlYXZlIHRoZW0gY29tbWVudGVkLiAgVW5jb21tZW50ZWQgb3B0aW9ucyBvdmVycmlkZSB0aGUKIyBkZWZhdWx0IHZhbHVlLgoKIyBJZiB5b3Ugd2FudCB0byBjaGFuZ2UgdGhlIHBvcnQgb24gYSBTRUxpbnV4IHN5c3RlbSwgeW91IGhhdmUgdG8gdGVsbAojIFNFTGludXggYWJvdXQgdGhpcyBjaGFuZ2UuCiMgc2VtYW5hZ2UgcG9ydCAtYSAtdCBzc2hfcG9ydF90IC1wIHRjcCAjUE9SVE5VTUJFUgojClBvcnQgMjAyMgojQWRkcmVzc0ZhbWlseSBhbnkKI0xpc3RlbkFkZHJlc3MgMC4wLjAuMAojTGlzdGVuQWRkcmVzcyA6OgoKSG9zdEtleSAvc3NoZC9zc2hfaG9zdF9yc2Ffa2V5CiNIb3N0S2V5IC9zc2hkL3NzaF9ob3N0X2VjZHNhX2tleQojSG9zdEtleSAvc3NoZC9zc2hfaG9zdF9lZDI1NTE5X2tleQoKIyBDaXBoZXJzIGFuZCBrZXlpbmcKI1Jla2V5TGltaXQgZGVmYXVsdCBub25lCgojIExvZ2dpbmcKI1N5c2xvZ0ZhY2lsaXR5IEFVVEgKU3lzbG9nRmFjaWxpdHkgQVVUSFBSSVYKI0xvZ0xldmVsIElORk8KCiMgQXV0aGVudGljYXRpb246CgojTG9naW5HcmFjZVRpbWUgMm0KUGVybWl0Um9vdExvZ2luIG5vClN0cmljdE1vZGVzIG5vCiNNYXhBdXRoVHJpZXMgNgojTWF4U2Vzc2lvbnMgMTAKClB1YmtleUF1dGhlbnRpY2F0aW9uIHllcwoKIyBUaGUgZGVmYXVsdCBpcyB0byBjaGVjayBib3RoIC5zc2gvYXV0aG9yaXplZF9rZXlzIGFuZCAuc3NoL2F1dGhvcml6ZWRfa2V5czIKIyBidXQgdGhpcyBpcyBvdmVycmlkZGVuIHNvIGluc3RhbGxhdGlvbnMgd2lsbCBvbmx5IGNoZWNrIC5zc2gvYXV0aG9yaXplZF9rZXlzCiNBdXRob3JpemVkS2V5c0ZpbGUJL3BnY29uZi9hdXRob3JpemVkX2tleXMKQXV0aG9yaXplZEtleXNGaWxlCS9zc2hkL2F1dGhvcml6ZWRfa2V5cwoKI0F1dGhvcml6ZWRQcmluY2lwYWxzRmlsZSBub25lCgojQXV0aG9yaXplZEtleXNDb21tYW5kIG5vbmUKI0F1dGhvcml6ZWRLZXlzQ29tbWFuZFVzZXIgbm9ib2R5CgojIEZvciB0aGlzIHRvIHdvcmsgeW91IHdpbGwgYWxzbyBuZWVkIGhvc3Qga2V5cyBpbiAvZXRjL3NzaC9zc2hfa25vd25faG9zdHMKI0hvc3RiYXNlZEF1dGhlbnRpY2F0aW9uIG5vCiMgQ2hhbmdlIHRvIHllcyBpZiB5b3UgZG9uJ3QgdHJ1c3Qgfi8uc3NoL2tub3duX2hvc3RzIGZvcgojIEhvc3RiYXNlZEF1dGhlbnRpY2F0aW9uCiNJZ25vcmVVc2VyS25vd25Ib3N0cyBubwojIERvbid0IHJlYWQgdGhlIHVzZXIncyB+Ly5yaG9zdHMgYW5kIH4vLnNob3N0cyBmaWxlcwojSWdub3JlUmhvc3RzIHllcwoKIyBUbyBkaXNhYmxlIHR1bm5lbGVkIGNsZWFyIHRleHQgcGFzc3dvcmRzLCBjaGFuZ2UgdG8gbm8gaGVyZSEKI1Bhc3N3b3JkQXV0aGVudGljYXRpb24geWVzCiNQZXJtaXRFbXB0eVBhc3N3b3JkcyBubwpQYXNzd29yZEF1dGhlbnRpY2F0aW9uIG5vCgojIENoYW5nZSB0byBubyB0byBkaXNhYmxlIHMva2V5IHBhc3N3b3JkcwpDaGFsbGVuZ2VSZXNwb25zZUF1dGhlbnRpY2F0aW9uIHllcwojQ2hhbGxlbmdlUmVzcG9uc2VBdXRoZW50aWNhdGlvbiBubwoKIyBLZXJiZXJvcyBvcHRpb25zCiNLZXJiZXJvc0F1dGhlbnRpY2F0aW9uIG5vCiNLZXJiZXJvc09yTG9jYWxQYXNzd2QgeWVzCiNLZXJiZXJvc1RpY2tldENsZWFudXAgeWVzCiNLZXJiZXJvc0dldEFGU1Rva2VuIG5vCiNLZXJiZXJvc1VzZUt1c2Vyb2sgeWVzCgojIEdTU0FQSSBvcHRpb25zCiNHU1NBUElBdXRoZW50aWNhdGlvbiB5ZXMKI0dTU0FQSUNsZWFudXBDcmVkZW50aWFscyBubwojR1NTQVBJU3RyaWN0QWNjZXB0b3JDaGVjayB5ZXMKI0dTU0FQSUtleUV4Y2hhbmdlIG5vCiNHU1NBUElFbmFibGVrNXVzZXJzIG5vCgojIFNldCB0aGlzIHRvICd5ZXMnIHRvIGVuYWJsZSBQQU0gYXV0aGVudGljYXRpb24sIGFjY291bnQgcHJvY2Vzc2luZywKIyBhbmQgc2Vzc2lvbiBwcm9jZXNzaW5nLiBJZiB0aGlzIGlzIGVuYWJsZWQsIFBBTSBhdXRoZW50aWNhdGlvbiB3aWxsCiMgYmUgYWxsb3dlZCB0aHJvdWdoIHRoZSBDaGFsbGVuZ2VSZXNwb25zZUF1dGhlbnRpY2F0aW9uIGFuZAojIFBhc3N3b3JkQXV0aGVudGljYXRpb24uICBEZXBlbmRpbmcgb24geW91ciBQQU0gY29uZmlndXJhdGlvbiwKIyBQQU0gYXV0aGVudGljYXRpb24gdmlhIENoYWxsZW5nZVJlc3BvbnNlQXV0aGVudGljYXRpb24gbWF5IGJ5cGFzcwojIHRoZSBzZXR0aW5nIG9mICJQZXJtaXRSb290TG9naW4gd2l0aG91dC1wYXNzd29yZCIuCiMgSWYgeW91IGp1c3Qgd2FudCB0aGUgUEFNIGFjY291bnQgYW5kIHNlc3Npb24gY2hlY2tzIHRvIHJ1biB3aXRob3V0CiMgUEFNIGF1dGhlbnRpY2F0aW9uLCB0aGVuIGVuYWJsZSB0aGlzIGJ1dCBzZXQgUGFzc3dvcmRBdXRoZW50aWNhdGlvbgojIGFuZCBDaGFsbGVuZ2VSZXNwb25zZUF1dGhlbnRpY2F0aW9uIHRvICdubycuCiMgV0FSTklORzogJ1VzZVBBTSBubycgaXMgbm90IHN1cHBvcnRlZCBpbiBSZWQgSGF0IEVudGVycHJpc2UgTGludXggYW5kIG1heSBjYXVzZSBzZXZlcmFsCiMgcHJvYmxlbXMuClVzZVBBTSBubyAKCiNBbGxvd0FnZW50Rm9yd2FyZGluZyB5ZXMKI0FsbG93VGNwRm9yd2FyZGluZyB5ZXMKI0dhdGV3YXlQb3J0cyBubwpYMTFGb3J3YXJkaW5nIHllcwojWDExRGlzcGxheU9mZnNldCAxMAojWDExVXNlTG9jYWxob3N0IHllcwojUGVybWl0VFRZIHllcwojUHJpbnRNb3RkIHllcwojUHJpbnRMYXN0TG9nIHllcwojVENQS2VlcEFsaXZlIHllcwojVXNlTG9naW4gbm8KVXNlUHJpdmlsZWdlU2VwYXJhdGlvbiBubwojUGVybWl0VXNlckVudmlyb25tZW50IG5vCiNDb21wcmVzc2lvbiBkZWxheWVkCiNDbGllbnRBbGl2ZUludGVydmFsIDAKI0NsaWVudEFsaXZlQ291bnRNYXggMwojU2hvd1BhdGNoTGV2ZWwgbm8KI1VzZUROUyB5ZXMKI1BpZEZpbGUgL3Zhci9ydW4vc3NoZC5waWQKI01heFN0YXJ0dXBzIDEwOjMwOjEwMAojUGVybWl0VHVubmVsIG5vCiNDaHJvb3REaXJlY3Rvcnkgbm9uZQojVmVyc2lvbkFkZGVuZHVtIG5vbmUKCiMgbm8gZGVmYXVsdCBiYW5uZXIgcGF0aAojQmFubmVyIG5vbmUKCiMgQWNjZXB0IGxvY2FsZS1yZWxhdGVkIGVudmlyb25tZW50IHZhcmlhYmxlcwpBY2NlcHRFbnYgTEFORyBMQ19DVFlQRSBMQ19OVU1FUklDIExDX1RJTUUgTENfQ09MTEFURSBMQ19NT05FVEFSWSBMQ19NRVNTQUdFUwpBY2NlcHRFbnYgTENfUEFQRVIgTENfTkFNRSBMQ19BRERSRVNTIExDX1RFTEVQSE9ORSBMQ19NRUFTVVJFTUVOVApBY2NlcHRFbnYgTENfSURFTlRJRklDQVRJT04gTENfQUxMIExBTkdVQUdFCkFjY2VwdEVudiBYTU9ESUZJRVJTCgojIG92ZXJyaWRlIGRlZmF1bHQgb2Ygbm8gc3Vic3lzdGVtcwpTdWJzeXN0ZW0Jc2Z0cAkvdXNyL2xpYmV4ZWMvb3BlbnNzaC9zZnRwLXNlcnZlcgoKIyBFeGFtcGxlIG9mIG92ZXJyaWRpbmcgc2V0dGluZ3Mgb24gYSBwZXItdXNlciBiYXNpcwojTWF0Y2ggVXNlciBhbm9uY3ZzCiMJWDExRm9yd2FyZGluZyBubwojCUFsbG93VGNwRm9yd2FyZGluZyBubwojCVBlcm1pdFRUWSBubwojCUZvcmNlQ29tbWFuZCBjdnMgc2VydmVyCg==

kind: Secret

metadata:

labels:

pg-cluster: crdcluster

pgo-backrest-repo: 'true'

name: crdcluster-backrest-repo-config

namespace: pgo

type: Opaque

Step 3: Create the CRD File

As part of the Operator pattern, the PostgreSQL Operator looks for an entry to be added to the pgcluster CRD. In order to do this, we first need to create a CRD file.

Below is the CRD file, called crdcluster.json, we will use to create the crdcluster. It includes references to the various secrets we created in the previous steps:

{

"apiVersion": "crunchydata.com/v1",

"kind": "Pgcluster",

"metadata": {

"labels": {

"archive": "false",

"archive-timeout": "60",

"crunchy-pgbadger": "false",

"crunchy_collect": "false",

"current-primary": "crdcluster",

"deployment-name": "crdcluster",

"name": "crdcluster",

"pg-cluster": "crdcluster",

"pgo-backrest": "true",

"pgo-version": "4.0.1",

"primary": "true"

},

"name": "crdcluster",

"namespace": "pgo"

},

"spec": {

"ArchiveStorage": {

"accessmode": "",

"fsgroup": "26",

"matchLabels": "",

"name": "",

"size": "",

"storageclass": "standard",

"storagetype": "dynamic",

"supplementalgroups": ""

},

"BackrestStorage": {

"accessmode": "ReadWriteOnce",

"fsgroup": "26",

"matchLabels": "",

"name": "",

"size": "1G",

"storageclass": "standard",

"storagetype": "dynamic",

"supplementalgroups": ""

},

"ContainerResources": {

"limitscpu": "",

"limitsmemory": "",

"requestscpu": "",

"requestsmemory": ""

},

"PrimaryStorage": {

"accessmode": "ReadWriteOnce",

"fsgroup": "26",

"matchLabels": "",

"name": "crdcluster",

"size": "1G",

"storageclass": "standard",

"storagetype": "dynamic",

"supplementalgroups": ""

},

"ReplicaStorage": {

"accessmode": "ReadWriteOnce",

"fsgroup": "26",

"matchLabels": "",

"name": "",

"size": "1G",

"storageclass": "standard",

"storagetype": "dynamic",

"supplementalgroups": ""

},

"backuppath": "",

"backuppvcname": "",

"ccpimage": "crunchy-postgres",

"ccpimagetag": "centos7-11.4-2.4.1",

"clustername": "crdcluster",

"customconfig": "",

"database": "mydb",

"name": "crdcluster",

"namespace": "pgo",

"nodename": "",

"policies": "",

"port": "5432",

"primaryhost": "crdcluster",

"primarysecretname": "crdcluster-primaryuser-secret",

"replicas": "0",

"rootsecretname": "crdcluster-postgres-secret",

"secretfrom": "",

"status": "",

"strategy": "1",

"user": "myuser",

"userlabels": {

"archive": "false",

"archive-timeout": "60",

"crunchy-pgbadger": "false",

"crunchy_collect": "false",

"pgo-backrest": "true",

"pgo-version": "4.0.1"

},

"usersecretname": "crdcluster-myuser-secret"

}

}

Step 4: Create the PostgreSQL Cluster

Now we can create the PostgreSQL cluster! Simply run the following commands:

# create the required postgres credentials for the crdcluster cluster

kubectl -n pgo create -f postgres-secret.yml

kubectl -n pgo create -f primaryuser-secret.yml

kubectl -n pgo create -f myuser-secret.yml

kubectl -n pgo create -f backrest-repo-config.yml

# create the pgcluster CRD for the crdcluster cluster

kubectl -n pgo create -f crdcluster.json

Success, we've created a PostgreSQL cluster in Kubernetes! But how do we know that it was successfully created, and is being managed by the PostgreSQL Operator?

Step 5: Validating the Creation of the PostgreSQL Cluster

There are a few PostgreSQL Operator commands we can use to validate the creation of the cluster. First, to see if the PostgreSQL Operator is aware of crdcluster, you can use pgo show cluster like so:

pgo show cluster -n pgo crdcluster

This command should successfully return and provide information about the current state of the cluster.

To see if the crdcluster is up, running, and available, you can use the pgo test command:

pgo test -n pgo crdcluster

If the cluster is up and running, you should see output similar to the following:

cluster : crdcluster

psql -p 5432 -h 10.0.4.193 -U myuser

postgres is Working

psql -p 5432 -h 10.0.4.193 -U myuser

mydb is Working

psql -p 5432 -h 10.0.4.193 -U postgres

postgres is Working

psql -p 5432 -h 10.0.4.193 -U postgres

mydb is Working

psql -p 5432 -h 10.0.4.193 -U primaryuser

postgres is Working

psql -p 5432 -h 10.0.4.193 -U primaryuser

mydb is Working

If you want to inspect the user credentials that we defined in Step 1 were created and available to the cluster, you can do so with the pgo show user command:

pgo show user -n pgo crdcluster

You should see output similar to:

cluster : crdcluster

secret : crdcluster-myuser-secret

username: myuser

password: password

secret : crdcluster-postgres-secret

username: postgres

password: password

secret : crdcluster-primaryuser-secret

username: primaryuser

password: password

Cleaning Up: How to Delete the Cluster

You can delete the cluster in a few ways, with two methods listed below:

Method 1: Use pgo

The simplest way to delete the cluster is to use the pgo command. The following will delete the cluster and any backups and persistent-volume claims (PVCs) associated with it:

pgo delete cluster -n pgo -b -d crdcluster

Method 2: Deleting Directly from the CRD

The following commands allow you to remove the cluster directly from the CRD. They delete the various secrets, PVCs, and the CRD of the crdcluster itself:

kubectl delete secret -n pgo \

crdcluster-backrest-repo-config \

crdcluster-postgres-secret \

crdcluster-primaryuser-secret \

crdcluster-myuser-secret

kubectl delete pgcluster -n pgo crdcluster

kubectl delete pvc -n pgo crdcluster crdcluster-pgbr-repo

Conclusion

The PostgreSQL Operator provides a lot of out-of-the-box functionality for managing PostgreSQL clusters on Kubernetes. It is also designed to be flexible and recognizes that certain users require the ability to interact directly with Kubernetes CRDs directly to create the desired PostgreSQL cluster to spec. This is another example of how you can use the PostgreSQL Operator to build out database clusters the way that you want to use them for your team.