Latest Articles

PostgreSQL Monitoring for App Developers: Alerts & Troubleshooting

Jonathan S. Katz

10 min readMore by this author

We've seen an example of how to set up PostgreSQL monitoring in Kubernetes. We've looked at two sets of statistics to keep track of it in your PostgreSQL cluster: your vitals (CPU/memory/disk/network) and your DBA fundamentals.

While staring at these charts should help you to anticipate, diagnose, and respond to issues with your Postgres cluster, the odds are that you are not staring at your monitor 24 hours a day. This is where alerts come in: a properly set up alerting system will let you know if you are on the verge of a major issue so you can head it off at the pass (and alerts should also let you know that there is a major issue).

Dealing with operational production issues was a departure from my application developer roots, but I looked at it as an opportunity to learn a new set of troubleshooting skills. It also offered an opportunity to improve communication skills: I would often convey to the team and customers what transpired during a downtime or performance degradation situation (VSSE: be transparent!). Some of what I observed I used to help us to improve the application, while other parts helped me to better understand how PostgreSQL works.

But I digress: let's drill into alerts on your Postgres database.

Note that just because an alert or alarm is going off, it does not mean you need to immediately react: for example, a transient network degradation issue may cause a replica to lag further behind a primary for a bit too long but will clear up when the degradation passes. That said, you typically want to investigate the alert to understand what is causing it.

Additionally, it's important to understand what actions you want to take to solve the problem. For example, a common mistake during an "out-of-disk" error is to delete the PostgreSQL WAL logs with a rm command; doing so can lead to a very bad day (and is also an advertisement for ensuring you have backups).

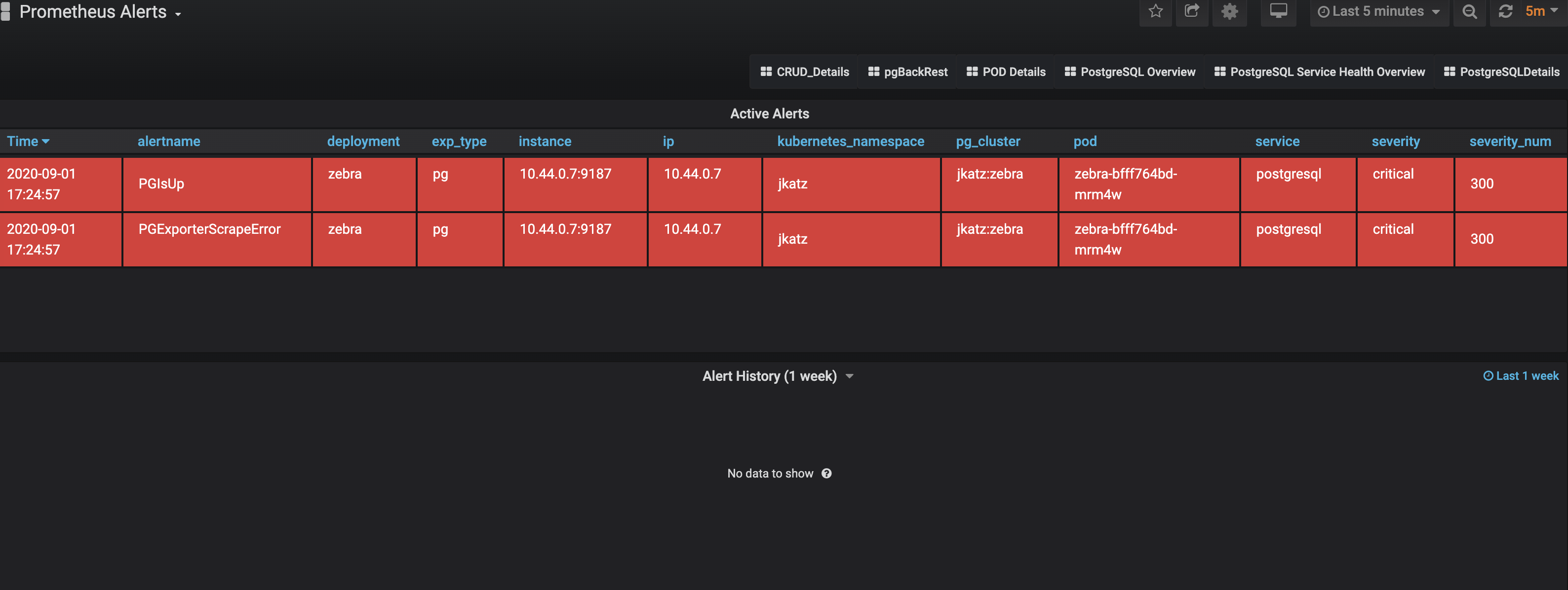

As mentioned in the post on setting up PostgreSQL monitoring in Kubernetes, the Postgres Operator uses pgMonitor for metric collection and visualization via open source projects like Prometheus and Grafana. pgMonitor uses open source Alertmanager for configuring and sending alerts, and is what the PostgreSQL Operator uses.

Using the above, let's dive into some of the items that you should be alerting on, and I will describe how my experience as an app developer translated into troubleshooting strategies.

"Is This Thing On?"

If you choose only one thing to alert on in your PostgreSQL cluster (and as I hope this article makes clear, you should alert on multiple things), it should be availability. If your application is unable to connect or transaction with your database, you're probably in for a bad day.

While it's easy to understand the question "can I connect to my database?", troubleshooting the issue can range from easy to Byzantine. For example, all of these are reasons why your PostgreSQL cluster could be unavailable:

- All of your instances are powered down

- The network is unreachable

- PostgreSQL is stuck in a crash loop

and many more, including some of the alerts we'll cover further on.

Sometimes, availability can be transient: in cloud environments, it's not uncommon that a blip in network connectivity can occur that can momentarily impact availability. You should set your availability alert threshold to a value high enough that will account for these anomalies, so you are only paged for real downtime events.

To troubleshoot, it helps to ask the following questions:

- Can I reach my PostgreSQL instance over the same network my application is using? If not, there could be a problem there.

- Is PostgreSQL on? If not, see if you can start it.

- Is PostgreSQL powering on but crashing? If it is crashing, check the PostgreSQL logs to see if you can determine what's failing.

PostgreSQL being unavailable could also be something that's application driven. For example, PostgreSQL enforces a max_connections parameter that will disallow any connections beyond that number (with some exceptions in newer releases). While PostgreSQL is still up, your application may not be able to connect if the max_connections number is exceeded.

pgMonitor with start issuing alerts when the number of connections gets closer to this number. To troubleshoot, determine what is causing the number of connections to PostgreSQL to increase. If it is because of organic growth (e.g. more application nodes connecting to your database), you may consider increasing max_connections (there are drawbacks to this, though, PostgreSQL 14 makes strides in connection efficiency) or using a connection pooler like pgBouncer.

Availability is a complex topic (and high availability is an even more complex topic), and even working through availability issues can be complicated. However, knowing what questions to ask (which is true for any issue) can help you troubleshoot the root cause of unavailability.

"Am I Out Of Space?"

If you run PostgreSQL long enough, you will run out of space, but not for the reasons you may think! It's normal to think that you run out of space on your disk when your PostgreSQL database is too big for your disk, but, believe it or not, that is not the reason I've ever maxed out my hard drive!

In fact, reasons where I've triggered out of disk alerts include:

- The WAL directory was too large (the dreaded failing replication slot).

- The log file directory not properly rotating.

- Too much junk data being inserted and removed into a table, to the point where a VACUUM FULL was needed.

- Storing a logical backup on the same volume that grew too big (don't worry -- the physical backups were stored separately!)

and probably others that are slipping my mind.

pgMonitor aims to let you know that you are running out of disk before it actually happens, issuing a warning when its at 75% capacity and going critical at 90%. This is an example of alerting before a problem becomes a big problem.

Now, what should you do when you're running out of disk? First, you should figure out if the reason is due to organic growth of your database versus something else. If its due to the organic growth, you may be able to get some space savings with newer versions of Postgres or perhaps some pruning of your data set, though likely you need to expand your volume. Note: we could probably spend a blog post just on managing PostgreSQL data growth, so let's transition into the "something else" category.

If you're running out of disk due to something else, first you should figure out what that something else is. No matter what the problem is, do not manually delete your WAL logs (pg_wal / pg_xlog). Again, this can lead you to have a much worse day than an out of disk error, but allows me to insert my standard reminder to ensure you take backups.

Once you figure out what's taking up so much disk, determine your best strategy to prune it. For example, if you have too many WAL files due to an unacknowledged replication slot, either get the service reconnected to it or drop the slot (and conveniently, pgMonitor comes with an alert for an unacknowledged replication slot!). If your regular log files are too big, compress your older log files and ship them somewhere else (or remove them) and adjust your logging policies. It could also be a combination of things that are causing your out of disk alerts to trigger, so be sure to take a look at your volume and determine what your size outliers are before taking action.

"Why Is This Not Clean?"

Vacuuming is an important PostgreSQL maintenance process. Regular vacuuming keeps your tables clean and prevents issues like transaction ID wraparound. Under normal circumstances, this should be will managed: the PostgreSQL autovacuum process will run as needed and ensure your database stays healthy from a vacuuming perspective.

pgMonitor comes with several alerts to warn you about issues that pertain for vacuuming. One of them is alerting when you have too many "idle in transaction" connections open: as discussed in an earlier article, this condition can lead to transaction ID wraparound. If you see this alert, inspect your connections, e.g. by looking at the pg_stat_activity table and determine if you need to forcibly close them.

If autovacuum is not running, or it cannot keep up with the ongoing changes, pgMonitor will issue an "emergency vacuum" alert. When you receive this alert, you should issue a VACUUM command as soon as possible.

There is also a pgMonitor alert warning if you are getting close to transaction ID wraparound -- much like the above, to help ward that off, run a VACUUM and read this article on guarding against transaction ID wraparound.

Falling Behind the Leader: Replication Lag

The previous articles in this series discussed replication lag, i.e. how far behind a replica (or follower) is behind a primary (or leader). Replicas that are too far behind the primary could create a situation where there is data loss if the primary becomes unavailable and the laggy replica is promoted.

Additionally, based on your setup, replication lag could actually lead to your replica becoming out of sync if WAL archives start to be recycled. In that case, you may need to reprovision your replica. (The Postgres Operator does not have this issue so long as your pgBackRest retention policies are reasonably high).

pgMonitor will alert you when a replica falls too far behind the primary, but even so, how do you react to this? Often times, this problem will self correct: maybe the network was saturated or the replica was slow.

However, if the replica lag keeps steadily increasing: this could be a sign of an unacknowledged replication slot (though there's an alert for that!), a broken connection between primary/replica, the replica itself is down, or that the replica cannot keep up processing transactions from the primary. These are all steps that will require intervention, whether checking the replica's connectivity to potentially increasing the resources to the replica (which in a perfect world, match the resources of the primary).

Running Out Of Numbers: Sequence Exhaustion

This is a cool alert that pgMonitor provides, though I don't envy anyone in this situation. PostgreSQL handles auto-incrementing through sequences, typically via the serial before PostgreSQL 10 and with identity columns (GENERATED AS IDENTITY) since. Most people use regular integers for these sequences as they take up less space and are more performant.

However, if you're storing a lot of data to a table with an integer sequence as the primary key, there is a chance you can run out of integers. When this happens, the sequence becomes exhausted, i.e. it is at its largest value. To remedy this, in the case of an integers, you can recast your sequence to use bigint!

If you're running out of big integers, well, that's a different problem, and in that case, I definitely want to talk to you to find out more about what you're doing!

Conclusion

There are many more things you can set alerts on (and you can read about all of the available alerts that the PostgreSQL Operator has) and there are certainly more troubleshooting strategies you can employ. Regardless, it helps to understand the various issues that can arise in your PostgreSQL cluster, so if you do end up in a critical situation, you have a plan in place to solve it.

Coupled with a solid foundation on what to look for when monitoring your Postgres clusters, alerting and troubleshooting plans will help you keep your databases available and running without issue.

This article is part of a series:

Related Articles

- Logical replication from Postgres to Iceberg

4 min read

- Hacking the Postgres Statistics Tables for Faster Queries

13 min read

- OpenTelemetry Observability in Crunchy Postgres for Kubernetes

8 min read

- Creating Histograms with Postgres

10 min read

- Introducing Crunchy Postgres for Kubernetes 5.8: OpenTelemetry, API enhancements, UBI-9 and More

4 min read