Production PostGIS Vector Tiles: Caching


Building maps that use dynamic tiles from the database is a lot of fun: you get the freshest data, you don't have to think about generating a static tile set, and you can do it with very minimal middleware, using pg_tileserv.

However, the day comes when it's time to move your application from development to production. What kinds of things should you be thinking about?

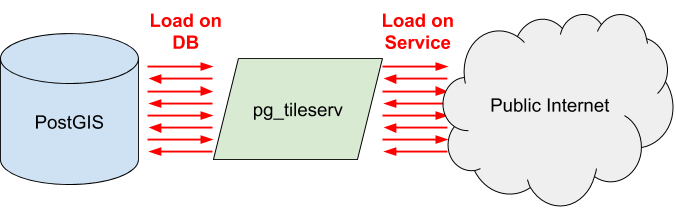

Let's start with load. A public-facing site has potentially unconstrained load. PostGIS is fast at generating vector tiles, but every uncached tile request still costs the database some work.

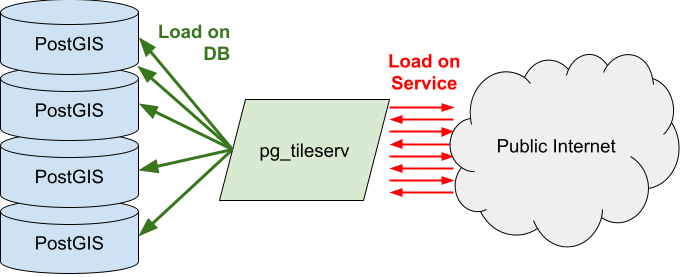

One way to deal with load is to scale out horizontally, using something like the postgres operator to control auto-scaling. But that is kind of a blunt instrument.

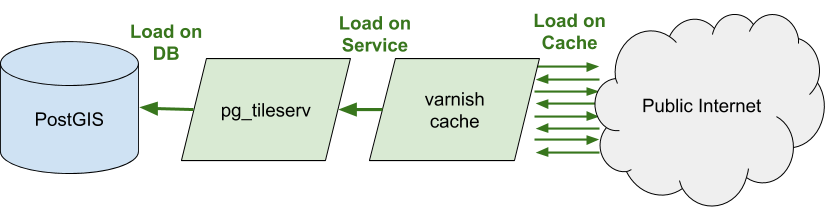

A far better way to insulate the database from load is with a caching layer. While building tiles from the database offers access to the freshest data, applications rarely need completely live data. Data that is one minute, five minutes, thirty minutes, or even a day old can be suitable, depending on the use case.
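To make the idea concrete, here is a minimal sketch of what a time-to-live (TTL) cache does, written as a toy in-memory class. It is not how a real proxy cache is implemented; the class name `TTLTileCache`, the `fake_tile` stand-in for the tile server, and the 60-second TTL are all assumptions chosen for illustration.

```python
import time
from typing import Callable, Dict, Tuple

TileKey = Tuple[int, int, int]  # (z, x, y)


class TTLTileCache:
    """Toy cache: serve a stored tile until it is older than `ttl` seconds."""

    def __init__(
        self,
        fetch: Callable[[int, int, int], bytes],
        ttl: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch      # stands in for a request to the tile server
        self._ttl = ttl
        self._clock = clock      # injectable so expiry can be simulated
        self._store: Dict[TileKey, Tuple[float, bytes]] = {}
        self.backend_calls = 0   # how often the "database" was actually hit

    def get(self, z: int, x: int, y: int) -> bytes:
        key = (z, x, y)
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]      # fresh enough: no backend work at all
        self.backend_calls += 1  # missing or expired: rebuild the tile
        tile = self._fetch(z, x, y)
        self._store[key] = (now, tile)
        return tile


def fake_tile(z: int, x: int, y: int) -> bytes:
    """Hypothetical stand-in for a tile rendered by the database."""
    return f"tile {z}/{x}/{y}".encode()


def demo() -> int:
    """Request the same tile 1000 times and report backend hits."""
    cache = TTLTileCache(fake_tile, ttl=60.0)
    for _ in range(1000):
        cache.get(4, 3, 5)
    return cache.backend_calls
```

In this sketch, a thousand requests for the same tile inside the TTL window reach the backend once. That is the trade being made: slightly stale tiles in exchange for a backend that only sees one request per tile per TTL period.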

A standard HTTP proxy cache is the simplest solution. Here's an example using just containers and Docker Compose that places a proxy cache between a dynamic tile service and the public web.

I used Docker Compose to hook together the community pg_tileserv container with the community varnish container to create a cached tile service. Here's the annotated file.

First, some boilerplate and a definition for the internal network the two containers will communicate over.

    version: '3.3'

    networks:
      webapp:

The services section has two entries. The first entry configures the varnish service, which accepts tile requests on host port 80 and forwards them to port 6081 inside the container.

Note the "time to live" for cache entries is set to 600 seconds, or ten minutes. The "backend" points to the "tileserv" service, on the usual unsecured port.

    services:
      web:
        image: eeacms/varnish
        ports:
          - '80:6081'
        environment:
          BACKENDS: 'tileserv:7800'
          DNS_ENABLED: 'false'
          COOKIES: 'true'
          PARAM_VALUE: '-p default_ttl=600'
        networks:
          - webapp
        depends_on:
          - tileserv

The second service entry is for the tile server. It publishes one port and binds to the same network as the cache. Because pg_tileserv is set up with out-of-the-box defaults, we only need to provide a DATABASE_URL to hook it up to the source database, which in this case is an instance on the Crunchy Bridge DBaaS.

      tileserv:
        image: pramsey/pg_tileserv
        ports:
          - '7800:7800'
        networks:
          - webapp
        environment:
          - DATABASE_URL=postgres://postgres:password@p.uniquehosthash.db.postgresbridge.com:5432/postgres

Does it work? Yes, it does. Point your browser at the cache and simultaneously watch the logs on your tile server. After a quick run of populating the common tiles, you'll find your tile server gets quiet, as the cache takes over the load.
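Besides watching the logs, you can inspect the response headers. The sketch below assumes stock Varnish behavior, where `Age` reports how long an object has been in cache and `X-Varnish` carries two transaction IDs on a hit and one on a miss. The container image may be configured to alter or strip these headers, so treat the result as a hint. The layer name `public.my_table` in the URL is hypothetical; substitute a layer your tile server actually publishes.

```python
import urllib.request
from typing import Dict, Mapping


def looks_cached(headers: Mapping[str, str]) -> bool:
    """Guess whether Varnish answered from cache.

    Assumption: default Varnish headers are present. A positive `Age`
    or two IDs in `X-Varnish` both suggest a cache hit.
    """
    lowered = {k.lower(): v for k, v in headers.items()}
    age = lowered.get("age", "0").strip()
    if age.isdigit() and int(age) > 0:
        return True
    return len(lowered.get("x-varnish", "").split()) >= 2


def fetch_headers(url: str) -> Dict[str, str]:
    """Fetch a URL and return its response headers as a plain dict."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return dict(resp.headers.items())


def check(url: str = "http://localhost/public.my_table/0/0/0.pbf") -> None:
    """Request the same tile twice and print the cache guess each time."""
    for attempt in (1, 2):
        headers = fetch_headers(url)
        print(f"request {attempt}: cached={looks_cached(headers)}")
```

With the compose stack running, calling `check()` against a real layer would be expected to report a miss on the first request and a hit on the second, provided the tile was not already cached and the headers are passed through unchanged.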

This @mapscaping interview with @pwramsey about pg_tileserv was really useful. Best info? Adding a cache with 60s expiry reduced compute almost to zero (in a previous situation). Thanks, @bogind2! https://t.co/rzyU3DOHdD

— Tom Chadwin (@tomchadwin) January 19, 2021

If your load is too high for a single cache server like Varnish, consider adding another caching layer by putting a content delivery network (CDN) in front of your services.
