Latest Articles

Using PostGIS and pg_featureserv with QGIS

Martin Davis

5 min readMore by this author

My colleague Kat Batuigas recently wrote about using the powerful open-source QGIS desktop GIS to import data into PostGIS from an ArcGIS Feature Service. This is a great first step toward moving your geospatial stack onto the performant, open source platform provided by PostGIS. And there's no need to stop there! Crunchy Data has developed a suite of spatial web services that work natively with PostGIS to expose your data to the web, using industry-standard protocols. These include:

- pg_tileserv - allows mapping spatial data using the MVT vector tile format

- pg_featureserv - publishes spatial data using the OGC API for Features protocol

Recent versions of QGIS support using the OGC API for Features (previously known as WFS3) as a vector data source. So it should be able to source data from pg_featureserv.

Let's see how it works.

Load Data into PostGIS

To keep things simple we are using a Crunchy Bridge cloud-hosted Postgres/PostGIS instance. For demo purposes we'll load a dataset of British Columbia wildfire perimeter polygons (available for download here). The data is provided as a shapefile, so we can use the PostGIS shp2pgsql utility to load it into a table. (If the data was in another format then we could load it using ogr2ogr, or use QGIS itself as Kat described).

We use the -c option to have the loader create a table appropriate for the dataset, and the -I option to create a spatial index on it (always a good idea). The data is in the BC-Albers coordinate system, so we specify the SRID using as -s 3005. Here we are doing the load in two steps using an intermediate SQL file, or it can be done in a single command by piping the shp2pgsql output to psql.

shp2pgsql -c -D -s 3005 -i -I prot_current_fire_polys.shp bc.wildfire_poly > bc_wf.sql

psql -h p.asdfghjklqwertyuiop12345.db.postgresbridge.com -U postgres < bc_wf.sql

Using psql we can connect to the database and verify that the table has been created and loaded:

postgres=# \d bc.wildfire_poly

Table "bc.wildfire_poly"

Column | Type | Collation | Nullable | Default

------------+-----------------------------+-----------+----------+-----------------------------------------------

gid | integer | | not null | nextval('bc.wildfire_poly_gid_seq'::regclass)

objectid | double precision | | |

fire_year | integer | | |

fire_numbe | character varying(6) | | |

version_nu | double precision | | |

fire_size_ | numeric | | |

source | character varying(50) | | |

track_date | date | | |

load_date | date | | |

feature_co | character varying(10) | | |

fire_stat | character varying(30) | | |

fire_nt_id | integer | | |

fire_nt_nm | character varying(50) | | |

fire_nt_lk | character varying(254) | | |

geom | geometry(MultiPolygon,3005) | | |

Indexes:

"wildfire_poly_pkey" PRIMARY KEY, btree (gid)

"wildfire_poly_geom_idx" gist (geom)

postgres=# select count(*) from bc.wildfire_poly;

count

-------

133

Serve PostGIS Data with pg_featureserv

The Crunchy spatial services are lightweight native applications (written in Go), so it's easy to install them on a local or hosted platform. They can be downloaded as applications, a Docker container, or built from source. See the installation guide for details.

We're running pg_featureserv in a local environment. To connect it to the Bridge Postgres instance, we specify the database connection information in the config/pg_featureserv.toml file. The connection string can also be specified in an environment variable. See the documentation for more information.

[Database]

# Database connection

# postgresql://username:password@host/dbname

DbConnection = "postgres://postgres:password@p.asdfghjklqwertyuiop12345.db.postgresbridge.com:5432/postgres"

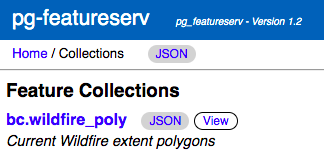

For ease-of-use pg_featureserv provides a browser-based Admin UI. Using this we can see the data table published as a collection:

http://localhost:9000/collections.html

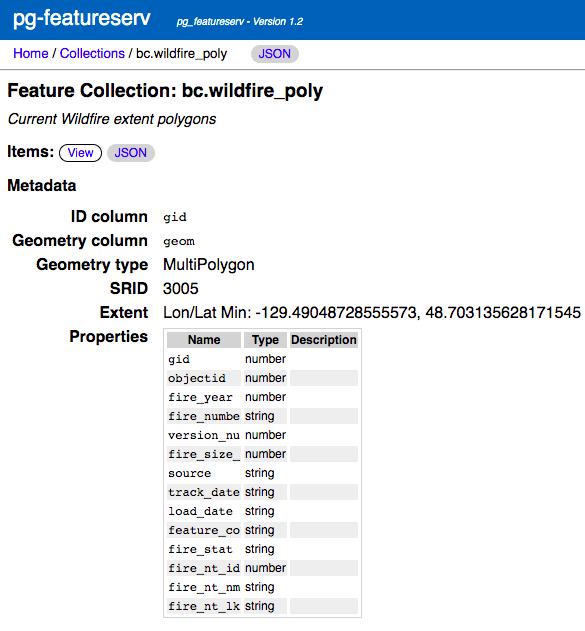

We can display the the collection metadata:

http://localhost:9000/collections/bc.wildfire_poly.html

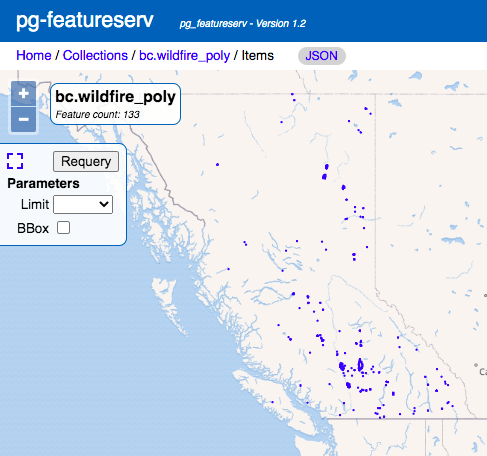

The Admin UI also lets us see the data on a map:

http://localhost:9000/collections/bc.wildfire_poly/items.html?limit=200

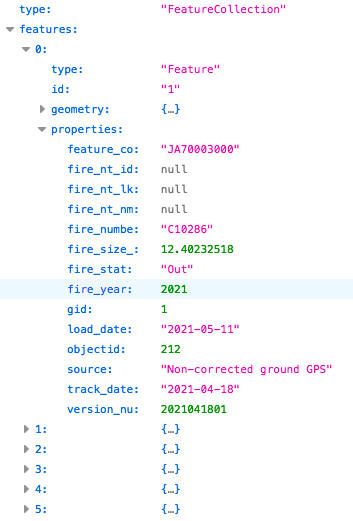

The main use of pg_featureserv is to serve feature data via the OGC API for Features (OAPIF), which is a RESTful HTTP protocol returning GeoJSON. We can verify that a OAPIF data query works by issuing the following request URL. The response is a GeoJSON document (shown here using the handy JSON display in the Firefox browser):

http://localhost:9000/collections/bc.wildfire_poly/items.json

Display pg_featureserv Collection as a QGIS Layer

In QGIS, we can create a layer that displays the data coming from the pg_featureserv instance.

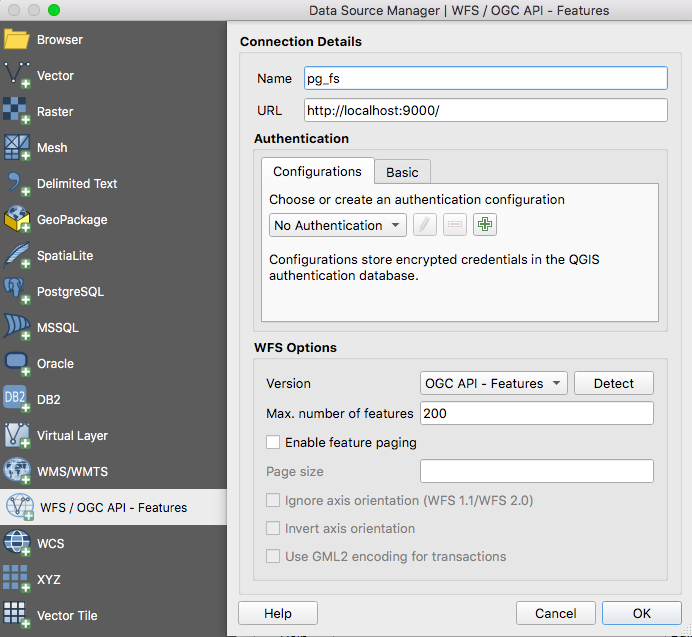

To do this, under the Layer menu choose Add Layer > Add WFS Layer**...**. This displays the Data Source Manager window at the WFS/OGC API-Features tab. Click New to define the connection to the pg_featureserv service. The Connection Details dialog lets us enter the following information:

- We'll use

pg_fsas the name of the connection - The connection URL is the service home endpoint

http://localhost:9000/ - The WFS Version is OGC API - Features

- We'll specify the Max. number of features as 200, since that will allow loading the entire dataset without paging

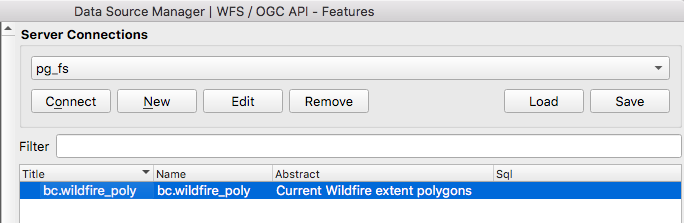

When we click Connect we see the collections published by the service (in this demo there is only one):

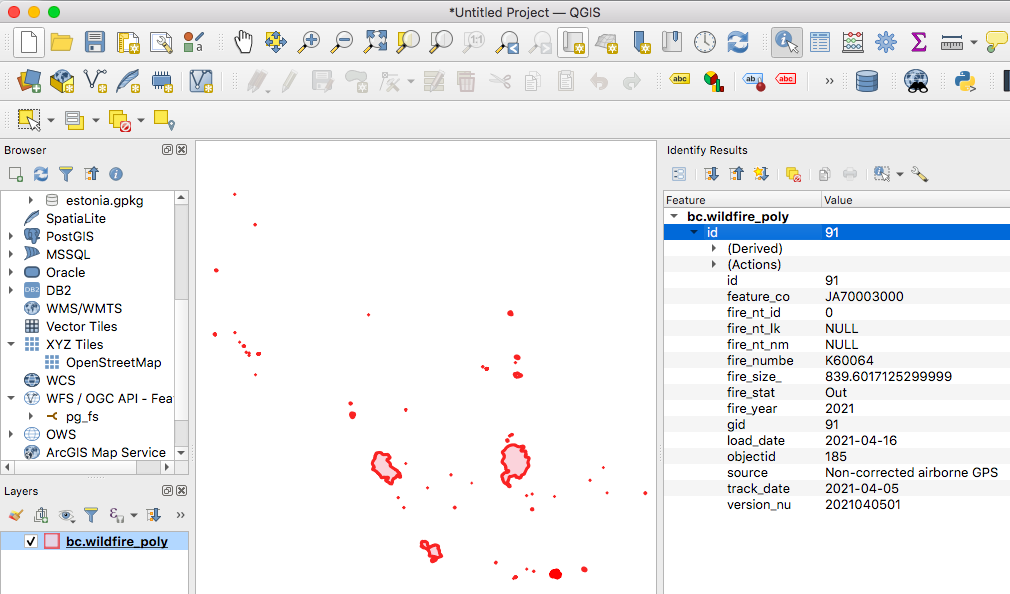

Now we can select the bc.wildfire_poly collection, and click Add to add it as a layer to the QGIS map layout. The screenshot also shows the result of using the Identify Features tool on the map, showing that all attribute data is loaded as well.

Of course, QGIS is able to connect to PostGIS directly, and provides full query and update capability when doing that. But it can be simpler, more flexible and more secure to expose PostGIS data via a web service. That way, it can be easily accessed by many different tools which might not be able to or allowed to connect to PostGIS directly.

We're also exploring ways to be able to run pg_tileserv and pg_featureserv directly in Crunchy Bridge, to provide a turn-key solution for exposing spatial data on the web. If this sounds interesting to you, get in touch!

Related Articles

- Logical replication from Postgres to Iceberg

4 min read

- Hacking the Postgres Statistics Tables for Faster Queries

13 min read

- OpenTelemetry Observability in Crunchy Postgres for Kubernetes

8 min read

- Creating Histograms with Postgres

10 min read

- Introducing Crunchy Postgres for Kubernetes 5.8: OpenTelemetry, API enhancements, UBI-9 and More

4 min read