Using Postgres for Statistics: Centering and Standardizing Data

Steve Pousty

8 min read

In the last two blog posts on data science in Postgres, we got our data ready for regression analysis and ended up with predictive variables that are on wildly different scales. Another example of data on different scales would be annual income versus age. The former is usually at least in the tens of thousands, while the latter rarely reaches a hundred.

If you do the regression with non-transformed variables, it becomes hard to compare the effect of the different variables. Statisticians account for this by converting raw data values into a Z-score for each explanatory variable. Other names for this technique are standardizing your data or centering and standardizing. As Wikipedia explains, the formula for a z-score is:

$$z = \frac{x - \bar{x}}{S}$$

Where:

- $x$ is the observation

- $\bar{x}$ is the mean of the sample

- $S$ is the standard deviation of the sample
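As a quick illustration of the z-score calculation, here is a minimal Python sketch that standardizes a small made-up sample. The `z_scores` helper and the `ages` values are hypothetical and are not taken from the fire data set. `statistics.stdev` computes the sample standard deviation.

```python
import statistics


def z_scores(values):
    """Return the z-score of each value, using the sample mean and sample standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return [(x - mean) / sd for x in values]


# Hypothetical sample, for example ages in years.
ages = [20, 30, 40, 50, 60]
scores = z_scores(ages)
print(scores)
```

Whatever the original units were, the standardized values end up with a mean of 0 and a sample standard deviation of 1, which is what makes variables on different scales comparable.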

Continuing with the theme of this series of blog posts (doing all the data science in Postgres), today we are going to examine methods for calculating z-scores for our data in the database. We want to do this transformation because, when we carry out logistic regression, we want to be able to compare the effects of the different factors on fire probability. Z-scores allow us to directly compare estimated parameters even if the original data has wildly different scales.

It turns out there are quite a few ways to approach this problem of calculating z-scores in the database, especially when we consider data that is frequently updated. Those techniques and my choice will be the discussion for today’s blog post.

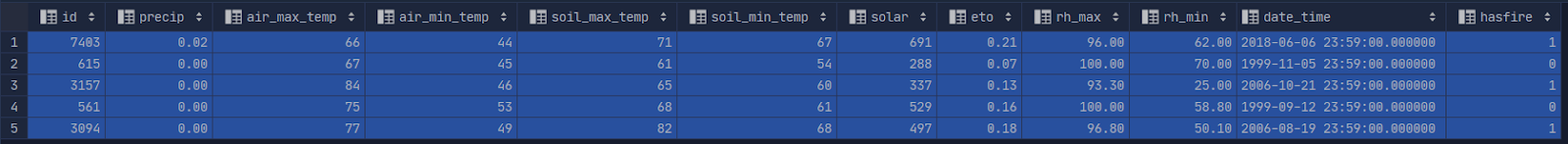

Here is a reminder of the data in the table we are going to use (named verification):

And with that introduction, let’s go ahead and get to it.

Problem Statement

Calculate the Z-score for a selected set of columns. Bonus points if the solution recalculates automatically when new data is entered into the table.

Finding a solution

One of the many benefits of working at Crunchy Data with so many Postgres experts is the ability to learn and think out loud with some of the best people in the field. I jumped on Slack and posed my scenario.

One of the first responses was, “How about a generated column?” At first glance this seems perfect: a column whose value is a formula that is automatically updated if the columns in the formula change. But then I read the fine print (and cried a little).

There is one main issue that disqualifies generated columns as a solution: the data for the calculation has to be in the inserted or updated row. It can’t depend on outside values, so our need to have the mean and standard deviation for all the data ruins that.

Once I finished mourning my loss of an elegant and simple solution I started looking at other possibilities. The main options for handling the problem are:

- Add the extra transformed columns to the verification table.

- Create a wholly new table for the transformed variables.

- Create a view that contains the transformed columns and other columns needed for analysis.

Let’s look at some of the trade-offs with each approach.

Transformed columns into original table

Benefits

One of the main benefits of this solution is that all the data stays together. I have the Z-scores for each variable right next to the original columns. If I want to explore the relationship between the response variable and the non-transformed data I can do that as well.

This solution is also easy to conceptualize and simple to implement. All I need to do is create some new NUMERIC columns and then use the Z formula above to calculate new values to update the table. For the initial population of the columns, I can run these in one easy step.
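The two steps described above (add a column, then fill it from the table-wide mean and standard deviation) can be sketched as follows. This is only an illustration under stated assumptions: it uses an in-memory SQLite database through Python's `sqlite3` module so it runs without a Postgres server, the `temp` and `temp_z` columns are hypothetical, and the `StddevSamp` class is registered only because SQLite lacks the `stddev_samp` aggregate that Postgres provides built in.

```python
import sqlite3
import statistics


class StddevSamp:
    """Sample standard deviation aggregate (built into Postgres, missing in SQLite)."""

    def __init__(self):
        self.values = []

    def step(self, value):
        if value is not None:
            self.values.append(value)

    def finalize(self):
        return statistics.stdev(self.values) if len(self.values) > 1 else None


conn = sqlite3.connect(":memory:")
conn.create_aggregate("stddev_samp", 1, StddevSamp)

# Hypothetical stand-in for the verification table with one explanatory column.
conn.execute("CREATE TABLE verification (id INTEGER PRIMARY KEY, temp NUMERIC)")
conn.executemany("INSERT INTO verification (temp) VALUES (?)", [(10,), (20,), (30,)])

# Step 1: add a column to hold the z-score.
conn.execute("ALTER TABLE verification ADD COLUMN temp_z NUMERIC")

# Step 2: fill it using the mean and standard deviation of the whole table.
conn.execute("""
    UPDATE verification
    SET temp_z = (temp - (SELECT avg(temp) FROM verification))
                 / (SELECT stddev_samp(temp) FROM verification)
""")

rows = conn.execute("SELECT temp, temp_z FROM verification ORDER BY id").fetchall()
print(rows)
```

The `UPDATE` touches every row, which is cheap for a one-time load but is the same work that has to be repeated whenever the underlying data changes.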

Drawbacks

The biggest drawback to this approach is the need to create a trigger that updates all of the rows when a new row is added to the table. We need to update all the rows because the new values will alter the global average and standard deviation. We have the added complication of writing a trigger. In addition, this is an expensive operation, especially if we are doing frequent insertions or our table starts to become really large.
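To see why a single insert forces an update of every row, here is a small Python sketch with made-up numbers. The `z_scores` helper and the `temps` values are hypothetical; the point is only that one new observation shifts the mean and standard deviation, and therefore every existing z-score.

```python
import statistics


def z_scores(values):
    """Return the z-score of each value, using the sample mean and sample standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(x - mean) / sd for x in values]


temps = [10, 20, 30]   # hypothetical existing rows
before = z_scores(temps)

temps.append(100)      # one new row arrives
after = z_scores(temps)

# Compare the z-scores of the three original rows before and after the insert.
for old, new in zip(before, after):
    print(f"{old:+.3f} -> {new:+.3f}")
```

None of the original rows keeps its old z-score, so a trigger that only filled in the new row would leave the rest of the table inconsistent.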

If we batch up our inserts to occur during slow times on the Postgres server, we could set up a cron job to carry out the work once a day. This is simpler to do than a trigger and mitigates some of the resource burden this operation places on other users. The trade-off is that the Z-scores would be stale between runs. The viability of this solution depends on how often you get inserts and how fresh you need the data to be.

Another drawback is that it makes our table a bit more unwieldy. A table holding just the Z value columns and the response variable would be easier to navigate, and listing its columns would be simpler. There would also be less chance of accidentally using the wrong formula in the analysis.

Wholly New Table for Z-scores

I'm not really going to go into benefits and drawbacks here. The only difference between this option and the one above is that the new table is cleaner in terms of separation of data. Other than that, I would still have to write a trigger that updates the new table whenever a new row is inserted or a data value changes in an existing row. I ruled this out pretty quickly.

Creating a view

Benefits

There are a couple of benefits to doing this in a view. The most important is that, since views calculate data only when queried, results in the view are always up to date. This would not be the case for a materialized view, but then again we can set a cron job to refresh the materialized view periodically.

Another benefit of a view is that we can have just the Z-scores in the view, giving us the advantage of the wholly new table, without the storage overhead. This would cut down on storage space needed for both the database and for any backup of the data.

And, just like a wholly new table, we can have different permissions on the view than we have on the table. That lets us ensure that data analysts with read permissions can’t accidentally ruin the data, and we never have to give them access to the raw scores.

Drawbacks

The main disadvantage to a view-based solution is that every time someone queries the view, Postgres has to do all the calculations. Every Z calculation involves two aggregate calculations (the mean and the standard deviation) plus one calculation PER ROW IN THE TABLE. This means that as the number of rows increases, we would see a greater and greater CPU and disk load per execution of the view.

This problem may seem like the same issue affecting the trigger in the transformed-columns approach, but with the view we pay the computation cost on every query in exchange for fresh results. The extra drawback is that the view will probably be queried most during times when the database is already heavily taxed by other queries. With the trigger, the calculation only happens on insert, which can give more flexibility.
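The view option discussed in this section can be sketched as follows. As an assumption for illustration, it uses an in-memory SQLite database through Python's `sqlite3` module in place of Postgres. The `temp` column and the `verification_z` view name are hypothetical, and `StddevSamp` is registered only because SQLite has no built-in `stddev_samp`.

```python
import sqlite3
import statistics


class StddevSamp:
    """Sample standard deviation aggregate (built into Postgres, missing in SQLite)."""

    def __init__(self):
        self.values = []

    def step(self, value):
        if value is not None:
            self.values.append(value)

    def finalize(self):
        return statistics.stdev(self.values) if len(self.values) > 1 else None


conn = sqlite3.connect(":memory:")
conn.create_aggregate("stddev_samp", 1, StddevSamp)
conn.execute("CREATE TABLE verification (id INTEGER PRIMARY KEY, temp NUMERIC)")
conn.executemany("INSERT INTO verification (temp) VALUES (?)", [(10,), (20,), (30,)])

# The view recomputes both aggregates over the whole table every time it is queried.
conn.execute("""
    CREATE VIEW verification_z AS
    SELECT v.id, (v.temp - s.mean) / s.sd AS temp_z
    FROM verification AS v
    CROSS JOIN (
        SELECT avg(temp) AS mean, stddev_samp(temp) AS sd FROM verification
    ) AS s
""")


def first_row_z():
    """Return the current z-score of the row with id = 1."""
    return conn.execute("SELECT temp_z FROM verification_z WHERE id = 1").fetchone()[0]


z_before = first_row_z()
conn.execute("INSERT INTO verification (temp) VALUES (100)")
z_after = first_row_z()  # no trigger or cron job ran; the view is simply re-evaluated
print(z_before, z_after)
```

The z-score of the first row changes after the insert without any maintenance step, which is the freshness benefit. The cost is that the aggregate subquery scans the table on every read.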

A middle way

Again, since I get to work at Crunchy Data, Stephen Frost chimed in with an elegant solution. Create a new table, named aggregates in this example, which holds just the average and standard deviation for each variable. Then, since the most expensive part of the view is the aggregations, use the trigger on the original table to update the averages and standard deviations in aggregates.

Now when creating a view, I can use the stored values in aggregates for the z-score calculation. Calculating the Z-scores in the view becomes a simple subtraction and division for each row, which is MUCH less computationally intensive.

We get the best of both worlds: up-to-date data with a much lower computational load!
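Here is a minimal sketch of this middle way, under the same assumptions as before: SQLite through Python's `sqlite3` module stands in for Postgres, the `temp` column, the `refresh_aggregates` trigger name, and the `verification_z` view name are hypothetical, and `StddevSamp` exists only because SQLite has no built-in `stddev_samp`. In Postgres the trigger would be written differently (a trigger function plus `CREATE TRIGGER`), and this sketch handles inserts only, not updates or deletes.

```python
import sqlite3
import statistics


class StddevSamp:
    """Sample standard deviation aggregate (built into Postgres, missing in SQLite)."""

    def __init__(self):
        self.values = []

    def step(self, value):
        if value is not None:
            self.values.append(value)

    def finalize(self):
        return statistics.stdev(self.values) if len(self.values) > 1 else None


conn = sqlite3.connect(":memory:")
conn.create_aggregate("stddev_samp", 1, StddevSamp)
conn.execute("CREATE TABLE verification (id INTEGER PRIMARY KEY, temp NUMERIC)")

# One row per standardized variable, holding its cached mean and standard deviation.
# DOUBLE PRECISION keeps SQLite from storing whole numbers as integers,
# which would turn the division in the view into integer division.
conn.execute("""
    CREATE TABLE aggregates (
        variable TEXT PRIMARY KEY,
        mean DOUBLE PRECISION,
        sd DOUBLE PRECISION
    )
""")
conn.execute("INSERT INTO aggregates (variable) VALUES ('temp')")

# The trigger does the expensive aggregation once per insert, not once per read.
conn.execute("""
    CREATE TRIGGER refresh_aggregates AFTER INSERT ON verification
    BEGIN
        UPDATE aggregates
        SET mean = (SELECT avg(temp) FROM verification),
            sd = (SELECT stddev_samp(temp) FROM verification)
        WHERE variable = 'temp';
    END
""")

# The view only subtracts and divides, using the cached values.
conn.execute("""
    CREATE VIEW verification_z AS
    SELECT v.id, (v.temp - a.mean) / a.sd AS temp_z
    FROM verification AS v
    CROSS JOIN aggregates AS a
    WHERE a.variable = 'temp'
""")

conn.executemany("INSERT INTO verification (temp) VALUES (?)", [(10,), (20,), (30,)])
cached = conn.execute("SELECT mean, sd FROM aggregates WHERE variable = 'temp'").fetchone()
z = [row[0] for row in conn.execute("SELECT temp_z FROM verification_z ORDER BY id")]
print(cached, z)
```

Reads through the view no longer aggregate over the table at all, so the per-query cost is one subtraction and one division per row, while the trigger keeps the cached values current on each insert.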

Which did I choose?

Unfortunately, Stephen chimed in well after I was on my way to doing the calculations in a new column in the table. My reasons for choosing this solution were:

- It was simpler to conceptualize.

- I understood all the pieces needed to do it.

- I was not going to be adding any new data to the project in the foreseeable future.

- I needed to get this part of the project done.

If I were going to do this project in production or where real money and lives were on the line, I would implement Stephen’s solution. After doing that I would probably do some benchmarking to see the difference between the straight view and the middle way of calculating the view.

Wrap up

Today we chatted about a lot of things a data scientist or app developer might need to consider when having a calculated value in their data. There is no “one true way”, so I presented some of the benefits and drawbacks of each approach. I hope this will be helpful to you next time you come across this type of problem.

In the next post in this series I will show you how I went about calculating all the new columns. It involved some fun usage of PL/pgSQL.

Do you have questions or feedback on my approach? Do you have a lot of experience to share about calculated columns? If so, I would love it if you could send a note to the Crunchy Data Twitter account. Happy calculations!
