Troubleshooting PostgreSQL Streaming Replication Synchronization

Jonathan S. Katz

9 min readMore by this author

Having replicas in a database environment can fulfill many goals: create a high-availability environment, load balance read-only queries across several nodes, have a read-only database to run analytical queries on, and more. PostgreSQL introduced streaming replication in PostgreSQL 9.0 almost 8 years ago to help facilitate satisfying the above requirements, and through the years, the community has added many features to continue to enhance the replication user experience.

There are many different ways to configure streaming replication in PostgreSQL, but fundamentally you need to consider if you want to have asynchronous or synchronous replication. With asynchronous replication, the default in PostgreSQL, a transaction is considered complete when it is committed on the primary database; the replica databases will replay and consume the transaction on their own time. With synchronous replication, the transaction will not be considered complete until it has been committed on all of the replicas (though PostgreSQL 10 introduced “quorum commit” which allows you to configure how many replicas need to commit the transaction before it’s considered complete).

Choosing which form of replication you need for your environment is out-of-scope for this article. Rather, we will look at what happens when a replica becomes out-of-sync with a primary, what to do, and how you can avoid this situation. Credits to Doug Hunley for originally compiling this guide.

Detecting An Out-of-Sync Replica

To throw in a slight editorial, I still remember the nightmares I had the first time I saw an error message like this almost 8 years ago:

ERROR: requested WAL segment 00000001000000340000005A has already been removed

When I began to inspect my cluster, I noticed that my replica server was no longer streaming information from my primary.

The way that replication in PostgreSQL works is that the replicas load changes from the write-ahead log (WAL) files generated by the primary, which are guaranteed to be in order. The primary is configured to keep a certain number of WAL files (more on this in a bit) and thus if it removes a log before the replica has a chance to load it, then the replica may not be able to load it and thus become out-of-sync.

What Causes A Replica To Go Out-of-Sync

There are several reasons why a replica can become out-of-sync from your primary database:

- The replica has not communicated with the primary for a while, due to a network issue or the replica being down

- The primary database is writing changes faster than the replica can process the changes

- Something changed in your primary or replica configuration that is causing the WAL logs to either not be shipped or processed

In my specific situation, what happened was I was replicating from a bare metal server with lots of resources to a lower powered virtualized instance.

WAL files are written to a directory named “pg_xlog” prior to PostgreSQL 10, and “pg_wal” from PostgreSQL 10 and onward. PostgreSQL determines how many WAL files to keep based upon a few settings.

For all supported versions of PostgreSQL:

- wal_keep_segments: the minimum number of WAL files to store on the primary. A higher number decreases the risk of a standby becoming out of sync, but requires more disk space on the primary.

For PostgreSQL 9.5 and later:

- max_wal_size: the maximum combined size of all WAL logs to remain on disk before the changes are written to the data files (a “checkpoint”). This limit can be exceeded under a few circumstances, e.g. having a high “wal_keep_segments” setting.

For PostgreSQL 9.4 and earlier:

- checkpoint_segments: the maximum number of WAL files before the changes to the database are written to the data files (a “checkpoint”). Once the WAL files are flushed, they can be removed. This parameter is often tuned for performance; a higher number might mean more WAL files are potentially kept around longer.

Later we will discuss a feature added in PostgreSQL 9.4 that makes it easier to keep your replicas in sync.

Fixing An Out-of-Sync Replica: The Good & The Ugly

Remember when you first set up your PostgreSQL replica that you had to perform what is known as a “base backup” to get the baseline state of your database, and subsequently replay all of the WAL logs written since the backup began. If your database is large, this process takes some time.

Under a lot of circumstances, you will need to rebuild your replica using the above method, which could be painful. However, based on your disaster recovery policies, you may be able to avoid a full replica rebuild.

The Good Scenario: Loading From Your Archive

If you use a PostgreSQL backup tool, such as pgBackrest, you will setup something called “continuous archiving,” which stores all of your WAL files to a specified location (and hopefully a separate disk from your primary database). Typically your disaster recovery setup will include a retention policy that specifies how much data you want to keep (e.g. 14 days).

If your replica is out-of-sync but continuous archiving is enabled on your primary (i.e. archive_mode = on), first test to see if the “archive_command” is still working. Checking this command varies based upon which backup tool or script you are using: for example, pgBackRest has its own check command. If the “archive_command” is not working, you will need to perform a full replica rebuild, which is described below.

If your “archive_command” is working normally, go to your replica and inspect the recovery.conf file, which you set up when the replica was first created. Check to see if you have set up a restore_command in the file; the “restore_command” is used for point-in-time-recovery but can also be used as an alternative to streaming replication by creating what is known as a hot standby.

If a restore_command is set up, test to see if it is working, and if it is not, fix it and restart your replica PostgreSQL instance. If you do not have a “restore_command” set up, follow the directions in your backup utility for setting up a “restore_command” and restart your PostgreSQL replica instance. If your archive contains the WAL file that the primary reported as missing, it should be able to replay all of the changes on the replica and become back in sync.

If the WAL file is missing from the archive or if continuous archiving is not enabled (“archive_mode = off”) you will have to rebuild your replica from a base backup.

The Ugly Scenario: Rebuilding Your Replica From a Base Backup

At this point, you will need to rebuild your replica from a base backup. If you are using a backup tool such as pgBackRest, you can follow its instructions on how to do a full restore which you have the option to parallelize as well. Ensure that you have your “postgresql.conf” and “recovery.conf” set up to indicate that this instance is a replica.

If you are not using a backup tool, you can rebuild your instance using the pg_basebackup tool that comes with PostgreSQL. To do this, first you need to shut down your PostgreSQL replica instance. You then need to remove your PostgreSQL data directory on the replica (do not remove the data directory on the primary!). If your replica’s configuration files are within the PostgreSQL data directory, consider making a copy of them before removing the data directory.

As the PostgreSQL cluster owner (e.g. the postgres system user) and substituting $PGDATA is the path to your data directory, remove the data directory:

export PGDATA="/path/to/pgdata/directory"

rm -rfv $PGDATA/*

and then perform the base backup:

pg_basebackup -D $PGDATA -R -Xs -c fast -P -v

with any other connection setting flags needed to connect to the primary server. The above flags perform the following:

-D $PGDATAindicates the data directory to restore to-Rcreates the recovery.conf file with the appropriate settings. You may still want to override some of these settings based on your previous configuration-Xstells pg_basebackup to send any WAL files that are written to the primary to the replica while the backup is being performed, to help make it easier to replay all of the log files when the base backup is complete-c fasttells pg_basebackup to issue checkpoints immediately, i.e. all the changes in a WAL file are immediately written to the data tables-Pdisplays the progress of the restore-vturns on "verbose" mode

Once pg_basebackup completes, start your replica PostgreSQL instance. If the rebuild was successful, your PostgreSQL log should contain messages similar to:

LOG: entering standby mode

LOG: redo starts at A/4E000028

LOG: consistent recovery state reached at A/4E005500

LOG: started streaming WAL from primary at A/4F000000 on timeline 1

Never Again: Using Replication Slots to Prevent Replica Desynchronization

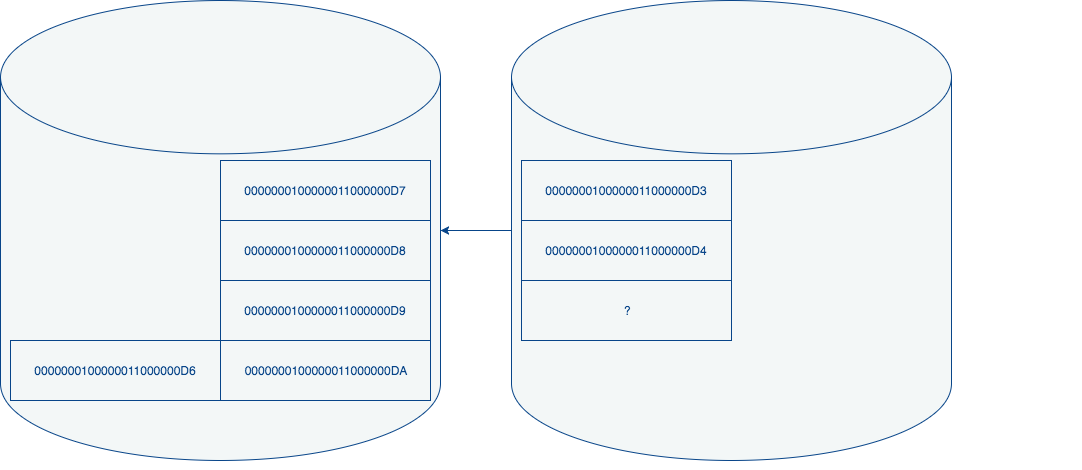

Replication slots were introduced in PostgreSQL 9.4 to help ensure a primary PostgreSQL instance does not remove WAL files before a replica has received the file. A replication slot keeps track of the last LSN (log sequence number) that its connected replica has received. Every time a replica successfully receives a WAL file, it lets the primary know it can update its LSN.

To create a replication slot on a primary database, as a database user that has the “replication” privilege (or a database superuser) run the following command:

SELECT * FROM pg_create_physical_replication_slot('replica1');

Once you have created a replication slot, all WAL files that have not been delivered to a replica will be saved to the disk.

On your replica, you will need to update your “recovery.conf” file with the following directive:

primary_slot_name = ‘replica1'

After you have saved this update, you will need to restart your replica PostgreSQL instance.

If you decide to permanently shut down the replica, you can remove the replication slot with the following command:

SELECT * FROM pg_drop_replication_slot('replica1');

You can check on the status of your replication slot from the primary PostgreSQL instance by running the following command:

SELECT * FROM pg_replication_slots;

Replication Slots Are Almost Turnkey, But...

While replication slots solve the “replication desynchronization” problem, they could potentially create a new problem: filling up your primary disk.

As mentioned earlier, PostgreSQL will keep all the WAL files on disk until all replicas using slots have acknowledged that they have received the changes (note that an individual slot can only be used by one replica). If a replica goes down for an extended period of time, the amount of saved WAL files could grow to the point where you run out of disk space.

To prevent this issue, you should ensure that you are actively monitoring how much of your disk is consumed and see if your available disk is suddenly growing very rapidly: that could be a sign that one of your replicas is disconnected. You should also be monitoring your replicas to ensure that they are up and connected to the primary database.

Regardless of whether or not you use replica slots, you should at a minimum be monitoring how much disk space is being consumed as that number is an integral part of managing a database.